Scientists have been promised a front-row seat for the formulation of the EU’s proposed AI regulatory structures. They should seize this opportunity to bridge some big gaps.

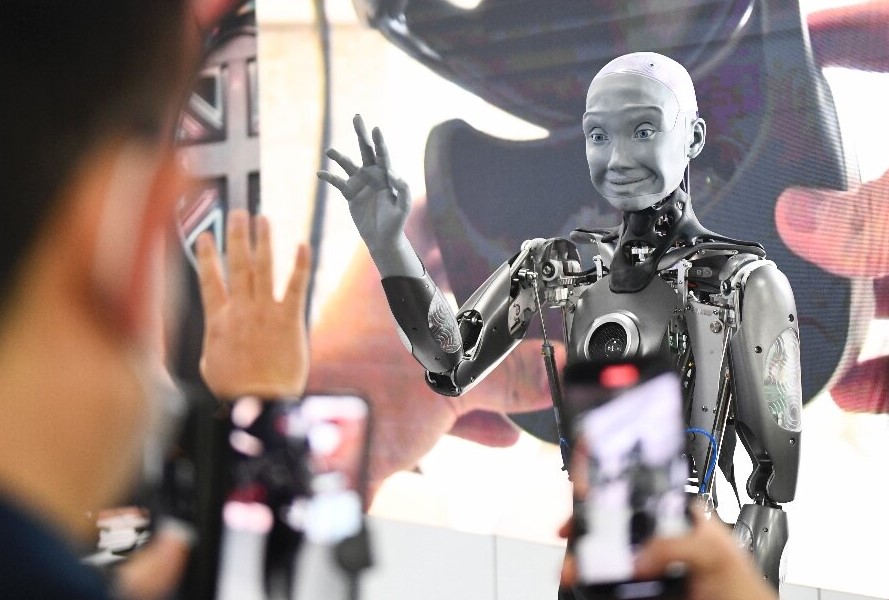

Late last year, the European Commission announced its long-awaited AI Act, which “aims to address risks to health, safety and fundamental rights” resulting from the applications of artificial intelligence (AI), reported Nature.

The act creates new regulatory arrangements in all 27 European Union member states. Once the act is approved by the European Parliament and the European Council (which comprises member states’ heads of government), a new ‘AI Office’ will also be attached to the European Commission.

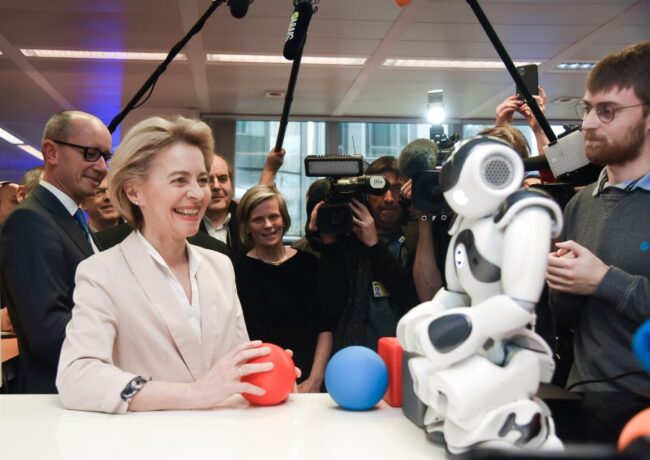

The AI Office will “enforce and supervise” rules, including those that will apply to applications such as ChatGPT. It will also, the commission says, have “a strong link with the scientific community”. This opens a door for researchers to help shape what promises to be one of the world’s most comprehensive sets of laws and regulations on AI, reported Nature.

Researchers need to seize this opportunity, and quickly. There are holes in the act that need to be filled before it enters into full force, which is expected to happen in around two years’ time.

Among those who have identified gaps are researchers studying the intersection of technology, law and ethics. To take one example, the act assumes that most AI carries “low to no risk”. This implies that many everyday AI applications (such as online chatbots that answer simple queries, and text-summarizing software) will not need to be submitted for regulation, reported Nature.

Applications considered ‘high-risk’ will be regulated. These include applications that use AI to screen job candidates or to carry out educational assessments, and those used by law enforcement.

But as Lilian Edwards, a legal scholar at Newcastle University, UK, points out in a report for the Ada Lovelace Institute in London, there are no reviewable criteria to support the act’s low- and high-risk classifications. Furthermore, where is the evidence that most AI is low-risk?

A second concern is that AI developers will, in many instances, be able to self-assess products deemed high-risk. Under the act, such providers will need to explain the methodologies and techniques used to obtain training data, including where and how those data were acquired and how they were cleaned, and to confirm that they comply with copyright laws, reported Nature.

Ideally, the regulator should establish an independent, third-party verification system that can also check raw data when necessary, even if only for a representative sample. Once established, the AI Office needs to make good on the commission’s pledge to work closely with the scientific community, harnessing all available expertise to answer these questions.

The regulation of new technologies is an unenviable, but essential, task, said Nature.

Governments need to support innovation, but they also have a duty to protect citizens from harm and to ensure that people’s rights are not violated. Lessons learnt from the regulation of existing technologies, from medicines to motor vehicles, include the need for the maximum possible transparency, for example in data and models.

Moreover, those responsible for protecting people from harm need to be independent of those whose role is to promote innovation, reported Nature.

Hadrien Pouget, who studies AI ethics at the Carnegie Endowment for International Peace in Washington DC, and his colleague Johann Laux at the University of Oxford, UK, have highlighted the necessity of regulatory independence, as well as transparency from AI providers, in an open letter to the future AI Office.

Meanwhile, the AI Advisory Body convened by United Nations Secretary-General António Guterres is urging everyone working on AI regulation to listen to as diverse a range of voices as possible, reported Nature.

The EU, to its credit, has much experience of drawing on natural and social science, along with engineering and technology, business and civil society, in its law-making.

The European Union needs to ensure that it draws on all of that experience in its AI work. Researchers have a small window in which to fix the gaps in the EU’s plans, and they need to jump in before it closes, as reported by Nature.

All credit to: Nature, Nature.com. Second photo: medtech.citeline
